Building CI/CD Pipelines for .NET Core on GitHub Actions

Shipping features fast requires confidence, and nothing builds confidence like a robust CI/CD pipeline. For .NET Core teams, GitHub Actions provides a powerful, integrated platform to automate everything from code commit to deployment.

This guide walks through building a mature, efficient pipeline for .NET Core, including intelligent caching, zero-downtime deployments, and security best practices.

Architecting for Speed and Reliability

A pipeline should do more than just build and deploy; it should optimize for fast feedback and resilience. I'll break this down into three core phases:

- Intelligent change detection: Determines what changed to avoid unnecessary builds.

- Optimized build and package: Compiles code, runs tests, and creates artifacts (Docker images in our scenario) as efficiently as possible.

- Zero-downtime deployment: Safely releases new versions without interrupting users.
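To make the phases concrete, here is a minimal skeleton, assuming one job per phase. The job names match the ones used later in this guide, while the `echo` steps are placeholders for the real steps. Chaining the jobs with `needs` makes each phase wait for the previous one, and a skipped or failed phase also skips every phase after it.

```yaml
# Hypothetical skeleton: one job per phase, chained with `needs`
jobs:
  detect-changes:          # Phase 1: intelligent change detection
    runs-on: ubuntu-latest
    steps:
      - run: echo "Decide which services changed"

  build-and-push:          # Phase 2: build and package only what changed
    needs: detect-changes
    runs-on: ubuntu-latest
    steps:
      - run: echo "Build and push the Docker images"

  deploy:                  # Phase 3: roll out the new images
    needs: build-and-push
    runs-on: ubuntu-latest
    steps:
      - run: echo "Deploy to production"
```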

Laying the Groundwork

```yaml
name: .NET Core CI/CD Pipeline

on:
  push:
    branches: [ master, develop ]
  pull_request:
    branches: [ master ]

env:
  REGISTRY: ghcr.io
  DOTNET_VERSION: '8.0.x'
  IMAGE_PREFIX: organization-name   # image names on GHCR must be lowercase
```

This configuration runs the pipeline on every push to `master` or `develop` and validates every pull request that targets `master`, maintaining code quality before anything is merged.
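Because every push runs the whole pipeline, two quick pushes to the same branch could overlap and race each other to push images and deploy. One option worth considering, which is not part of the configuration above, is a workflow-level `concurrency` group. The sketch below allows one run per workflow and branch at a time, cancels superseded pull-request runs, and makes push runs wait for the run in progress instead of cancelling it halfway through a deployment (only the newest waiting run is kept).

```yaml
# Sketch: at most one run per workflow and branch at a time
concurrency:
  group: ${{ github.workflow }}-${{ github.ref }}
  # Cancel superseded pull-request runs; push runs wait instead of
  # being cancelled in the middle of a deployment
  cancel-in-progress: ${{ github.event_name == 'pull_request' }}
```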

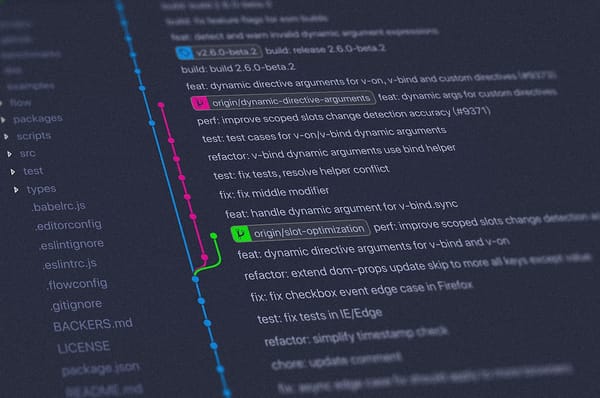

Phase 1: Smart Change Detection

One of the most powerful optimizations you can implement is selective building based on changed files. This can dramatically reduce build times in monorepos and microservice projects.

This job uses the dorny/paths-filter action to detect which parts of your application have changed, setting outputs for subsequent jobs.

```yaml
jobs:
  detect-changes:
    runs-on: ubuntu-latest
    outputs:
      api_changed: ${{ steps.filter.outputs.api }}
      worker_changed: ${{ steps.filter.outputs.worker }}
      shared_changed: ${{ steps.filter.outputs.shared }}
    steps:
      - name: Checkout repository
        uses: actions/checkout@v3

      - name: Check for changes
        uses: dorny/paths-filter@v2
        id: filter
        with:
          filters: |
            shared:
              - 'MyApp.Core/**'
              - 'MyApp.Infrastructure/**'
              - 'MyApp.Shared/**'
              # Also matches Api.Dockerfile and Worker.Dockerfile,
              # so editing either one rebuilds both services
              - '*.Dockerfile'
            api:
              - 'MyApp.Api/**'
              - 'Api.Dockerfile'
            worker:
              - 'MyApp.Worker/**'
              - 'Worker.Dockerfile'
```

The logic is straightforward: if the shared code changes, both the API and the Worker are rebuilt; if only the API changes, only the API is rebuilt. This can cut build times significantly.
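If you prefer each service's output to account for shared code on its own, `dorny/paths-filter` also accepts YAML anchors inside `filters`. The sketch below is a hypothetical variant of the step above: the shared paths are declared once (`&shared`) and referenced from each service filter (`*shared`), so `api` and `worker` report `true` whenever shared code changes.

```yaml
      - name: Check for changes
        uses: dorny/paths-filter@v2
        id: filter
        with:
          filters: |
            # Declared once, reused below through the *shared alias.
            # '*.Dockerfile' is left out so that editing one service's
            # Dockerfile only rebuilds that service.
            shared: &shared
              - 'MyApp.Core/**'
              - 'MyApp.Infrastructure/**'
              - 'MyApp.Shared/**'
            api:
              - *shared
              - 'MyApp.Api/**'
              - 'Api.Dockerfile'
            worker:
              - *shared
              - 'MyApp.Worker/**'
              - 'Worker.Dockerfile'
```

With this variant, a downstream condition for the API only needs to check `needs.detect-changes.outputs.api_changed == 'true'`.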

Phase 2: Building Efficient .NET Core Docker Images

A well-structured Dockerfile is key to fast, secure builds. We use a multi-stage build to keep the final image lean.

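```dockerfile
FROM mcr.microsoft.com/dotnet/aspnet:8.0 AS base
WORKDIR /app
# The app serves plain HTTP on port 80 (see ASPNETCORE_HTTP_PORTS below)
EXPOSE 80

FROM mcr.microsoft.com/dotnet/sdk:8.0 AS build
# Keep the .NET CLI quiet and telemetry-free during the build
ENV DOTNET_CLI_TELEMETRY_OPTOUT=1
ENV DOTNET_SKIP_FIRST_TIME_EXPERIENCE=1
ENV DOTNET_NOLOGO=true
WORKDIR /src

# Optimize Docker layer caching: copy only the project files first, so the
# restore layer is reused until a dependency changes. Copy the .csproj of
# every project that MyApp.Api references.
COPY ["MyApp.Api/MyApp.Api.csproj", "MyApp.Api/"]
COPY ["MyApp.Core/MyApp.Core.csproj", "MyApp.Core/"]
COPY ["MyApp.Infrastructure/MyApp.Infrastructure.csproj", "MyApp.Infrastructure/"]
RUN dotnet restore "MyApp.Api/MyApp.Api.csproj"

# Copy the rest of the source; only the layers below rebuild on code changes.
# A .dockerignore excluding bin/ and obj/ keeps host build output out of the image.
COPY . .
WORKDIR "/src/MyApp.Api"

FROM build AS publish
RUN dotnet publish "MyApp.Api.csproj" \
    -c Release \
    -o /app/publish \
    /p:UseAppHost=false \
    --no-restore

FROM base AS final
WORKDIR /app
COPY --from=publish /app/publish .
# .NET 8 images listen on 8080 by default; bind to port 80 instead
ENV ASPNETCORE_HTTP_PORTS=80
ENTRYPOINT ["dotnet", "MyApp.Api.dll"]
```

Key Optimizations: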

- Layer Caching: By copying the `.csproj` files and running `dotnet restore` first, we create a Docker layer that can be reused as long as the dependencies don't change.

- Multi-Stage Build: The final image contains only the ASP.NET Core runtime and the published application, not the SDK or the intermediate build files, drastically reducing its size and attack surface.

Orchestrating Parallel Builds

Now we use the change detection outputs and a build matrix to build only what's necessary, in parallel.

```yaml
  build-and-push:
    needs: detect-changes
    runs-on: ubuntu-latest
    if: |
      needs.detect-changes.outputs.api_changed == 'true' ||
      needs.detect-changes.outputs.worker_changed == 'true' ||
      needs.detect-changes.outputs.shared_changed == 'true'
    strategy:
      matrix:
        service:
          - name: api
            image_name: myapp-api
            dockerfile: ./Api.Dockerfile
            # GitHub Actions does not act on this key by itself: the job's
            # steps must check it, e.g. with `if: matrix.service.condition`
            condition: ${{ needs.detect-changes.outputs.api_changed == 'true' || needs.detect-changes.outputs.shared_changed == 'true' }}
          - name: worker
            image_name: myapp-worker
            dockerfile: ./Worker.Dockerfile
            condition: ${{ needs.detect-changes.outputs.worker_changed == 'true' || needs.detect-changes.outputs.shared_changed == 'true' }}
      fail-fast: false
    permissions:
      contents: read
      packages: write
```

Setting `fail-fast: false` is crucial: if the API build fails, the Worker build still runs, giving your team a complete picture of build health.

Pushing Images with Advanced Caching

This is where we turn a good pipeline into a great one. With BuildKit and registry caching, rebuilds reuse unchanged layers instead of starting from scratch, which makes them much faster.

```yaml
    steps:
      # Every step checks the matrix entry's condition, so an unchanged
      # service is skipped. `context: .` builds from the local checkout.
      - name: Checkout repository
        if: matrix.service.condition
        uses: actions/checkout@v3

      - name: Set up Docker Buildx
        if: matrix.service.condition
        uses: docker/setup-buildx-action@v2

      - name: Log in to GitHub Container Registry
        if: matrix.service.condition
        uses: docker/login-action@v2
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Extract metadata
        if: matrix.service.condition
        id: meta
        uses: docker/metadata-action@v4
        with:
          images: ${{ env.REGISTRY }}/${{ env.IMAGE_PREFIX }}/${{ matrix.service.image_name }}
          tags: |
            type=ref,event=branch
            type=sha,prefix={{branch}}-
            type=semver,pattern={{version}}
            type=raw,value=latest,enable={{is_default_branch}}

      - name: Build and push Docker image
        if: matrix.service.condition
        uses: docker/build-push-action@v4
        with:
          context: .
          file: ${{ matrix.service.dockerfile }}
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
          cache-from: type=registry,ref=${{ env.REGISTRY }}/${{ env.IMAGE_PREFIX }}/${{ matrix.service.image_name }}:buildcache
          cache-to: type=registry,ref=${{ env.REGISTRY }}/${{ env.IMAGE_PREFIX }}/${{ matrix.service.image_name }}:buildcache,mode=max
          build-args: |
            BUILDKIT_INLINE_CACHE=1
```

The `cache-from` and `cache-to` directives use the container registry itself as a cache, so even a build on a completely fresh runner can pull cached layers from previous runs, slashing build times. `mode=max` exports the layers of every build stage, including the `dotnet restore` layer in the `build` stage, rather than only the layers of the final image.
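If you would rather not keep cache images in your registry, BuildKit can also use the GitHub Actions cache service. The sketch below is an alternative to the two registry-cache lines, not part of the original setup. The `scope` attribute keeps the API and Worker caches apart; without it, both matrix legs would write to the same default scope and overwrite each other. This cache also counts against the repository's GitHub Actions cache storage limit.

```yaml
      - name: Build and push Docker image
        uses: docker/build-push-action@v4
        with:
          # All other keys of this step stay as shown above; only the
          # cache backend changes. One scope per service image.
          cache-from: type=gha,scope=${{ matrix.service.image_name }}
          cache-to: type=gha,scope=${{ matrix.service.image_name }},mode=max
```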

Phase 3: Zero-Downtime Deployment

The final step is deploying without interruptions. Using Docker Compose over SSH provides a simple yet effective strategy.

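```yaml
  deploy:
    runs-on: ubuntu-latest
    needs: build-and-push
    # Only pushes to master deploy; pull requests and develop pushes stop after the build
    if: github.event_name == 'push' && github.ref == 'refs/heads/master'
    environment: production
    steps:
      - name: Deploy via SSH
        # Consider pinning this action to a release tag or commit SHA instead of a branch
        uses: appleboy/ssh-action@master
        with:
          host: ${{ secrets.DEPLOY_HOST }}
          username: ${{ secrets.DEPLOY_USER }}
          key: ${{ secrets.SSH_PRIVATE_KEY }}
          script: |
            cd /opt/myapp
            docker compose pull myapp_api myapp_worker
            docker compose up -d \
              --no-deps \
              --force-recreate \
              myapp_api myapp_worker
            docker image prune -f
```

`docker compose pull` fetches the newly pushed images, and `docker compose up -d` recreates the containers from them. The `--force-recreate` flag recreates the containers even when Compose detects no change in configuration or image, guaranteeing a fresh start on the pulled images. `--no-deps` stops Compose from also starting or recreating the services these two depend on, such as a database. Recreating a container still involves a brief restart; for strict zero downtime, run at least two instances behind a reverse proxy and replace them one at a time.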
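The deploy script assumes a Compose file already exists in `/opt/myapp` on the server. It isn't shown in this guide, so the following is a hypothetical minimal version: the service names match the script, the image paths follow the `REGISTRY`/`IMAGE_PREFIX` naming from the workflow, and the port mapping assumes the API listens on port 80 inside the container. If the images are private, the server must also be logged in to `ghcr.io` for `docker compose pull` to succeed.

```yaml
# /opt/myapp/docker-compose.yml (hypothetical sketch)
services:
  myapp_api:
    # `latest` is the tag metadata-action adds on the default branch
    image: ghcr.io/organization-name/myapp-api:latest
    restart: unless-stopped
    ports:
      - "80:80"

  myapp_worker:
    image: ghcr.io/organization-name/myapp-worker:latest
    restart: unless-stopped
```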

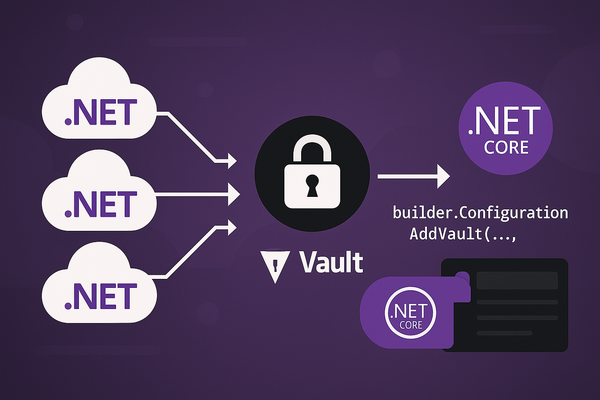

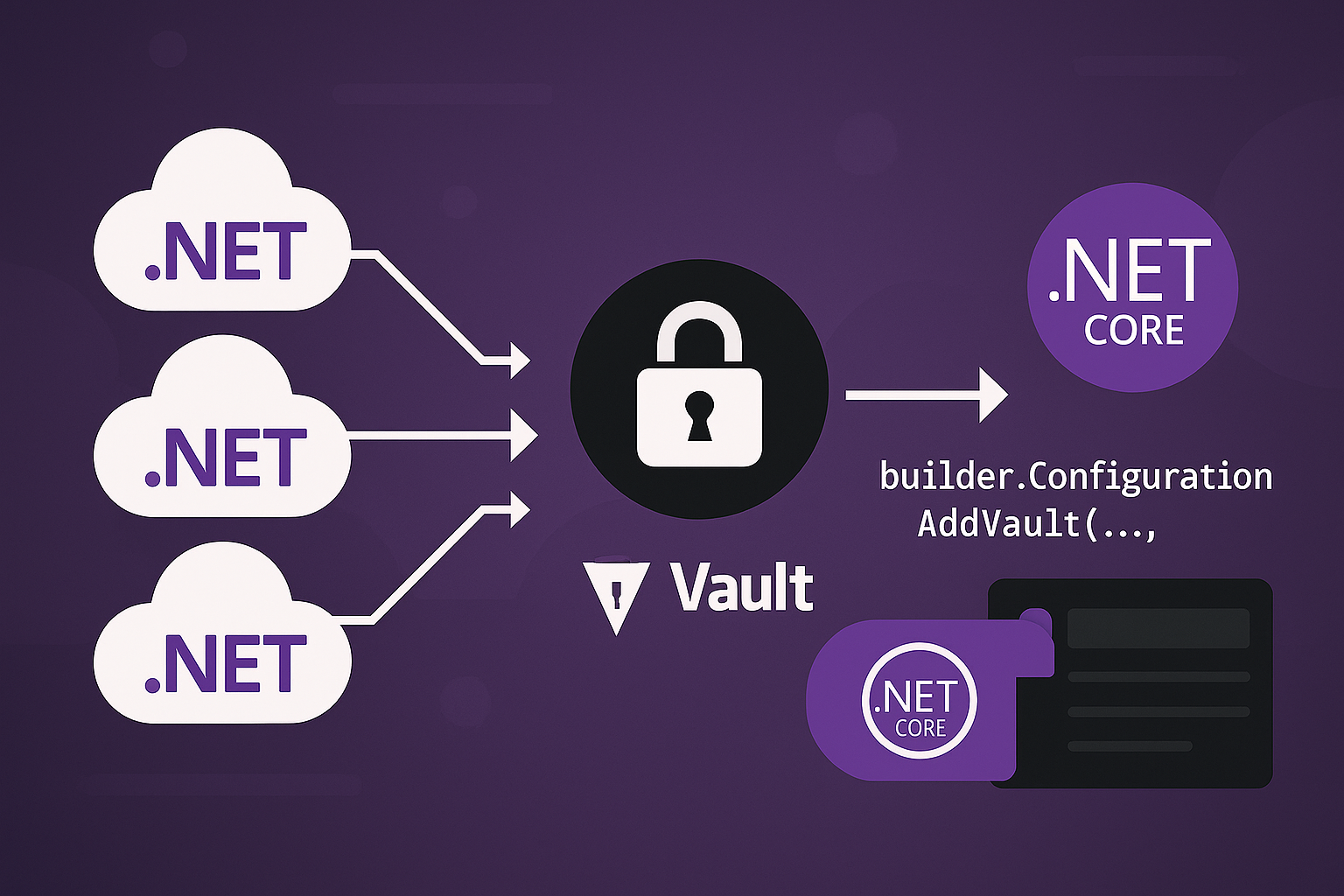

Securing Your Pipeline

Security cannot be an afterthought.

- Secrets Management: Never hardcode secrets. Use GitHub Secrets for sensitive data such as connection strings and API keys. For more advanced secrets management, you can integrate with a vault such as HashiCorp Vault (check out my post on HashiCorp Vault).

- Least-Privilege Permissions: Explicitly set narrow permissions in your workflow to limit the damage from a potential compromise.

```yaml
permissions:
  contents: read
  packages: write
```

- Testing Integration: A complete CI/CD pipeline includes automated testing.

```yaml
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      - name: Setup .NET
        uses: actions/setup-dotnet@v3
        with:
          dotnet-version: ${{ env.DOTNET_VERSION }}

      # Assumes a single solution file at the repository root
      - name: Restore dependencies
        run: dotnet restore

      - name: Build
        run: dotnet build --no-restore --configuration Release

      # "XPlat Code Coverage" requires the coverlet.collector package in each test project
      - name: Run unit tests
        run: dotnet test --no-build --verbosity normal --configuration Release --collect:"XPlat Code Coverage"

      - name: Upload coverage reports
        uses: codecov/codecov-action@v3
        with:
          files: '**/coverage.cobertura.xml'
          fail_ci_if_error: true
```

- Monitoring and Notifications: Keep your team informed about deployment status.

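```yaml
      # Last step of the deploy job; always() makes it run on failure too
      - name: Notify deployment status
        if: always()
        uses: 8398a7/action-slack@v3
        with:
          status: ${{ job.status }}
          text: |
            Deployment to production: ${{ job.status }}
            Commit: ${{ github.sha }}
            Author: ${{ github.actor }}
        env:
          # action-slack v3 reads the webhook from this environment variable
          SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK }}
```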
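As written, the `test` job runs alongside the build rather than in front of it, so an image can be pushed even when tests fail. One way to make the tests a gate, sketched here as an assumption about how you want the jobs ordered, is to list `test` in the `needs` of `build-and-push`; the rest of that job stays as shown earlier.

```yaml
  build-and-push:
    # Wait for change detection and a passing test run before building images
    needs: [detect-changes, test]
    runs-on: ubuntu-latest
    # if, strategy, permissions and steps stay as shown earlier
```

Because `deploy` already needs `build-and-push`, a failing test run then blocks both the image push and the deployment.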